Week 10: Normal Mapping

Reading

Monday

Friday recap

The transform feedback buffer demo is working. It was working at the end of class on Friday too, but I was displaying the files from the wrong directory in the browser. Oops.

The transform feedback buffer is one way of modifying geometry data before it reaches the fragment shader. The native OpenGL pipeline (unlike WebGL2) also supports additional shader stages, including geometry shaders, tessellation shaders, and compute shaders. These stages supplement the core vertex and fragment shaders. Given the web-based nature of this course and the variety of hardware platforms, we will not be able to explore these advanced topics in a JavaScript/WebGL2 context. The same goes for CUDA, a more general GPU computing framework for NVIDIA GPUs.

Depth Cues

Over the weeks this course has explored many ways of increasing the realism of computer-generated 3D graphics. Even though the final image is projected onto a 2D screen, the following features give us depth cues that signal we are viewing a 3D scene:

- Lighting
- Texturing
- Perspective projection
- Depth buffer / depth testing

This week we will extend this list a bit with normal mapping.

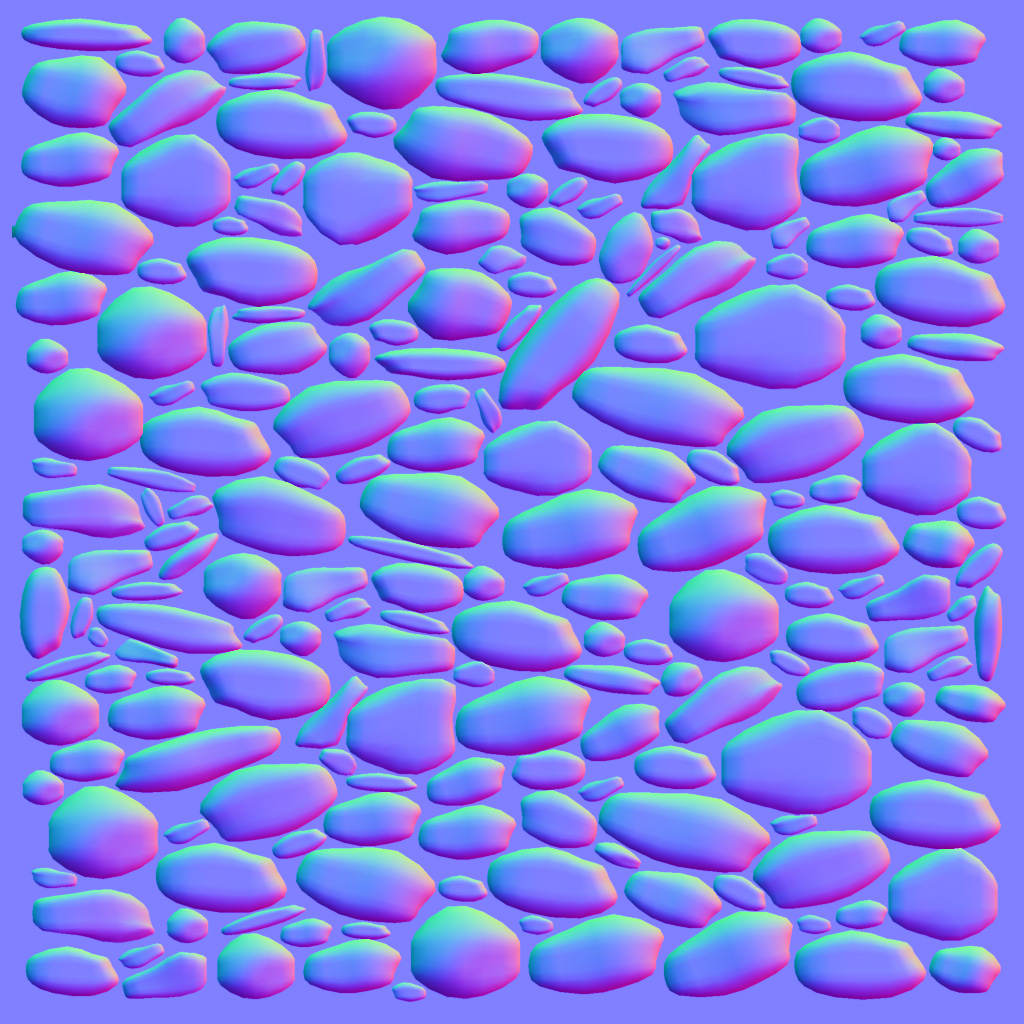

Recall that we sometimes used the normal-to-color mapping as a debugging tool:

`vec3 color = 0.5*(normal+vec3(1.));`

This mapping is easily inverted:

`vec3 normal = 2.*color - vec3(1.);`

so we can recover normals that are stored in a color map or texture.
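To make the round trip concrete, here is a minimal CPU-side sketch in JavaScript that mirrors the two GLSL one-liners. The function names (`normalToColor`, `colorToNormal`, `texelToNormal`) are made up for illustration and are not part of any course code. The last helper assumes the texture stores 8-bit channels, so the decoded vector is renormalized to absorb quantization error.

```javascript
// Map a unit normal with components in [-1, 1] to a color in [0, 1].
function normalToColor(n) {
  return n.map((c) => 0.5 * (c + 1.0));
}

// Inverse mapping: color in [0, 1] back to a vector in [-1, 1].
function colorToNormal(color) {
  return color.map((c) => 2.0 * c - 1.0);
}

// Assumption: the texel is 8-bit RGB (0..255 per channel).
// Quantization means the decoded vector is only approximately unit length,
// so we renormalize before using it for lighting.
function texelToNormal(rgb) {
  const n = colorToNormal(rgb.map((v) => v / 255));
  const len = Math.hypot(n[0], n[1], n[2]);
  return n.map((v) => v / len);
}

// A normal pointing straight out of the surface encodes to a light blue,
// which is why many normal maps look mostly blue.
console.log(normalToColor([0, 0, 1]));       // [0.5, 0.5, 1]
console.log(colorToNormal([0.5, 0.5, 1.0])); // [0, 0, 1]
```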

We can modify the existing surface normals specified in the geometry by sampling from a normal map and using the sampled values to perturb them. This changes the lighting we see in the scene, even though the underlying geometry is not modified.
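The effect on lighting can be sketched on the CPU with a simple Lambertian diffuse term. This is only an illustration under simplifying assumptions: the surface is flat with geometric normal +z, so the decoded normal can be used directly, and the helper names (`decodeNormal`, `diffuse`) are hypothetical. A real shader would usually also need to express the sampled normal in the same coordinate space as the light direction.

```javascript
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

const normalize = (v) => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return v.map((c) => c / len);
};

// Decode a normal-map color (components in [0, 1]) to a unit normal.
function decodeNormal(color) {
  return normalize(color.map((c) => 2.0 * c - 1.0));
}

// Lambertian diffuse term: cosine of the angle between normal and light,
// clamped at zero for surfaces facing away from the light.
function diffuse(normal, lightDir) {
  return Math.max(dot(normalize(normal), normalize(lightDir)), 0.0);
}

const lightDir = [0, 0, 1];            // light shining straight at the surface
const flatTexel = [0.5, 0.5, 1.0];     // decodes to +z, same as the geometry
const tiltedTexel = [0.75, 0.5, 0.75]; // decodes to a normal tilted 45 degrees toward +x

// Same flat geometry, different sampled normals, different brightness.
console.log(diffuse(decodeNormal(flatTexel), lightDir));   // 1
console.log(diffuse(decodeNormal(tiltedTexel), lightDir)); // about 0.707
```

The two texels sit on the same flat surface, yet the second one is shaded darker, which is what makes the surface appear bumpy.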

Using textures in this way to supplement the geometry can be helpful in other contexts too. Examples include ambient occlusion mapping, which bakes in soft shadows, and specular mapping, which marks regions that should or should not receive specular light contributions.
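As a rough sketch of how such maps might enter the lighting calculation, the JavaScript below scales individual lighting terms by per-texel map values. The function `shade` and its parameters are hypothetical, and the convention used (ambient occlusion scales the ambient term, the specular map scales the specular term) is one common choice assumed here, not something prescribed by these notes.

```javascript
// Combine scalar lighting terms using per-texel map values in [0, 1].
// ao:       1 = fully open to ambient light, 0 = fully occluded
// specMask: 1 = full specular highlight,     0 = no specular highlight
function shade(light, maps) {
  const { ambient, diffuse, specular } = light;
  const { ao, specMask } = maps;
  return ao * ambient + diffuse + specMask * specular;
}

const light = { ambient: 0.2, diffuse: 0.5, specular: 0.3 };

// An exposed, shiny texel keeps every contribution.
console.log(shade(light, { ao: 1.0, specMask: 1.0 }));

// A texel inside a crevice on a matte region loses half its ambient
// light and all of its specular highlight.
console.log(shade(light, { ao: 0.5, specMask: 0.0 }));
```

In a fragment shader the two map values would come from texture lookups at the fragment's texture coordinates, just like the normal map.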

Sketchfab model with multiple maps.