For this lab, you will use the MRJob library to write MapReduce programs in Python. MRJob allows you to run jobs locally, on a Hadoop cluster, or on Amazon's Elastic MapReduce platform, making it easy to test and deploy MapReduce tasks.

This will be a two-week lab with two parts: a warm-up, requiring a few small applications that can be run locally, and larger application that will process significantly more data on Amazon's cloud.

Rather than cluttering up the department's file system with lots of Python packages, we'll be using a python virtual environment that I've configured for MRJob. To use it, execute the following commands:

source /home/kwebb/public/setup-91.sh workon mrjob

After doing so, you should see (mrjob) at the beginning of your shell prompt. If all went well, you should now be able to "import mrjob" from within python. Note: You'll need to set up your environment this way every time you plan to work with the mrjob library.

These exercises will help you to think about dividing problems into mappers and reducers. The solutions to these will likely be only a handful of lines of code. Use the same mrjob.conf file that we used in the word count example.

Your solution to this part should be in MRGrep.py

If you're not already familiar with grep, it's a Unix command line application that searches the contents of a file for a specified text expression. You can invoke it with:

grep [text to search for] [name(s) of files to search]

For example, if you wanted to find all instances of the word "map" in MRGrep.py, or all instances of "map" in every python file in this directory, you could execute these commands, respectively. (Try them out...)

grep map MRGrep.py grep map *.py

Our MRGrep will behave slightly differently. It will take one or more strings to search for, and it should group the final output according by the matching expression. Strings to search for are specified with the -e flag, which can be passed multiple times:

python MRGrep.py -e [string1] -e [string2] -e [string3] [name(s) of files to search]

For example, if you wanted to search for instances of "agreeable", "Darcy", and "pride" in the text of Pride and Prejudice, you could run:

python MRGrep.py -e agreeable -e Darcy -e pride PrideAndPrejudice.txt > grep-output

You should get a result that looks like this example output.

Note: for your version of grep, you don't need to worry about reporting the file name like the command line version of grep does. We'll look at incorporating file names in the next part.

Your solution to this part should be in MRIndex.py

An inverted index maps words or strings of interest to the files they can be found in. Inverted indices are a key component of search engines.

For our MRIndex, we'll be looking for email addresses and web URLs. Given a list of files, your code should output the file location(s) of every email address or web URL it can find, as in this example output. Your mapper can read the name of the file it's currently processing by querying the environment (see the MRIndex.py starter code).

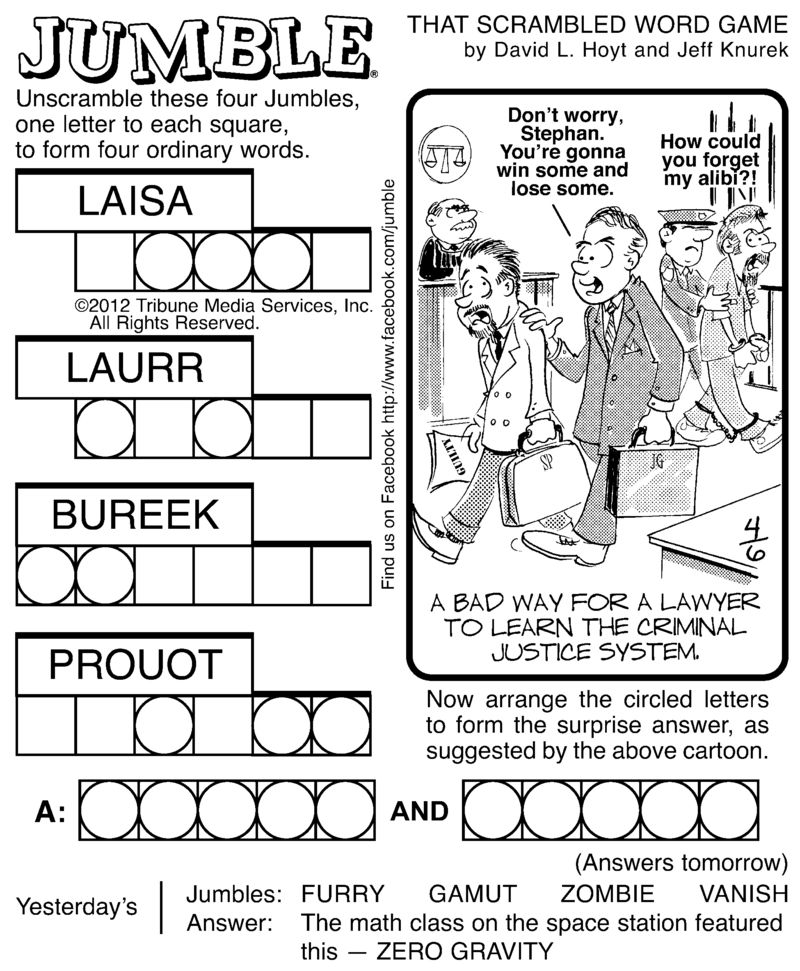

Your solution to this part should be in MRJumble.py

A Jumble is a word puzzle commonly found in newspapers. We're going to use a MapReduce job to make finding anagrams, and thus solving jumbles, easier. You're given a list of words from the Scrabble dictionary (dictionary.txt). Using the list as your input, generate an output file containing: a sequence of sorted letters, the number of words that those letters can produce, and the words themselves. A sequence of sorted letters should appear only once in the output.

For example, the sorted sequence of letters "ACEF" can produce two words, CAFE and FACE, so you should see a line in the output that looks like:

"ACEF" 2 CAFE FACE

Another (much less obvious) example:

"ACEIIMNORST" 3 CREATIONISM MISCREATION ROMANTICISE

Note: your output does NOT have to match this format exactly. It's fine if you have brackets, commas, quote marks, or other additional symbols as long as the order is correct (letters, # of words, words) and it's human-readable enough that I can grade it.

Your solution to this part should be in MRMovies.py

MRJob makes it easy to chain multiple map/reduce tasks together. For this part, we'll make use of this capability to process a large corpus of movie ratings for the purpose of providing recommendations. When you're done, your program will help you decide what to watch on Netflix tonight. For each pair of movies in the data set, you will compute their statistical correlation and cosine similarity (see this blog for a discussion of these and other potential similarity metrics). Since this isn't a statistics class, I've implemented the similarity metrics for you, but you need to provide them with the correct inputs.

For this section of the assignment, we have two input data sets: a small set (details) for testing on your local machine and a large set (details) for running on Amazon's cloud. You can access the data sets at /home/kwebb/public/cs91/lab1/

For both data sets, you will find two input files:

In addition to these two input files, your program should take a few additional arguments:

Please don't attempt to filter down to the movies specified via -m until the final step. Yes, it would be more efficient to do so earlier. I want you to compute the similarities for all movies. The -m argument is there to reduce the output size and make reading (and grading) the output easier. For the others arguments (-k, -l, and -p), you may filter whenever you want.

Since we're computing two similarity metrics, we'll need to combine them into a single similarity value somehow. For your submission, you should blend the values together, using 50% of each. That is, your final value for a pair of movies is 0.5 * the correlation value + 0.5 * the cosine value for the pair. (Feel free to experiment with different weights or similarity metrics, but you should do 50/50 between the two for your submission.)

For each movie selected (-m), sort them from largest to smallest by their blended similarity metric, outputting only the top K (-k) most similar movies that are have at least the minimum blended similarity score (-l). For movies meeting this criteria, you should output:

You may format your output however you like, as long as the values are in the correct order and I can reasonably make sense of it by looking at it briefly.

You may want to take a look at my output from running on the large data set.

You may structure your sequence of map/reduce tasks however you want to solve the problem. I recommend the following sequence of steps:

For the small data set, you can run it locally, just as you did for the warm up exercises. It will probably take a few minutes (5-10) to complete. You can run over the large data set locally too, if you want, but it will take at least an hour (probably closer to two hours). Instead, let's farm it out to Amazon's Elastic MapReduce (EMR) compute platform. There, it'll only take about 20-30 minutes, which is much more reasonable.

To run on Amazon, you'll need to tell MRJob to use "emr" as its runner. You'll also need to give it some basic configuration information. Edit your mrjob.conf with the following contents:

runners:

inline:

base_tmp_dir: /local

emr:

ec2_instance_type: m1.medium

num_ec2_instances: 10

aws_access_key_id: [your access key]

aws_secret_access_key: [your secret key]

aws_region: us-east-1

Now, you should be able to invoke MRJob with "--conf-path mrjob.conf -r emr" to do your processing in the cloud. My sample output has an example of a full command line.

Once you are satisfied with your solution, you can hand it in using the handin91 command from a terminal on any of the CS lab machines.

Only one of you or your partner should run handin91 to submit your joint solutions. If you accidentally both run it, send me email right away letting me know which of the two solutions I should keep and which I should discard.

You may run handin91 as many times as you like, and only the most recent submission will be recorded. This is useful if you realize, after handing in some programs, that you'd like to make a few more changes to them.